Balancing the Load: Secrets to Scalable Web Applications

10 min to read

3/4/2024

Discover the secrets behind scalable web applications in "Balancing the Load: Secrets to Scalable Web Applications." This guide covers load balancing and how it supports consistent performance and high availability for major web platforms. Learn about the algorithms and techniques that distribute traffic across servers, keeping your applications responsive and reliable under heavy demand.

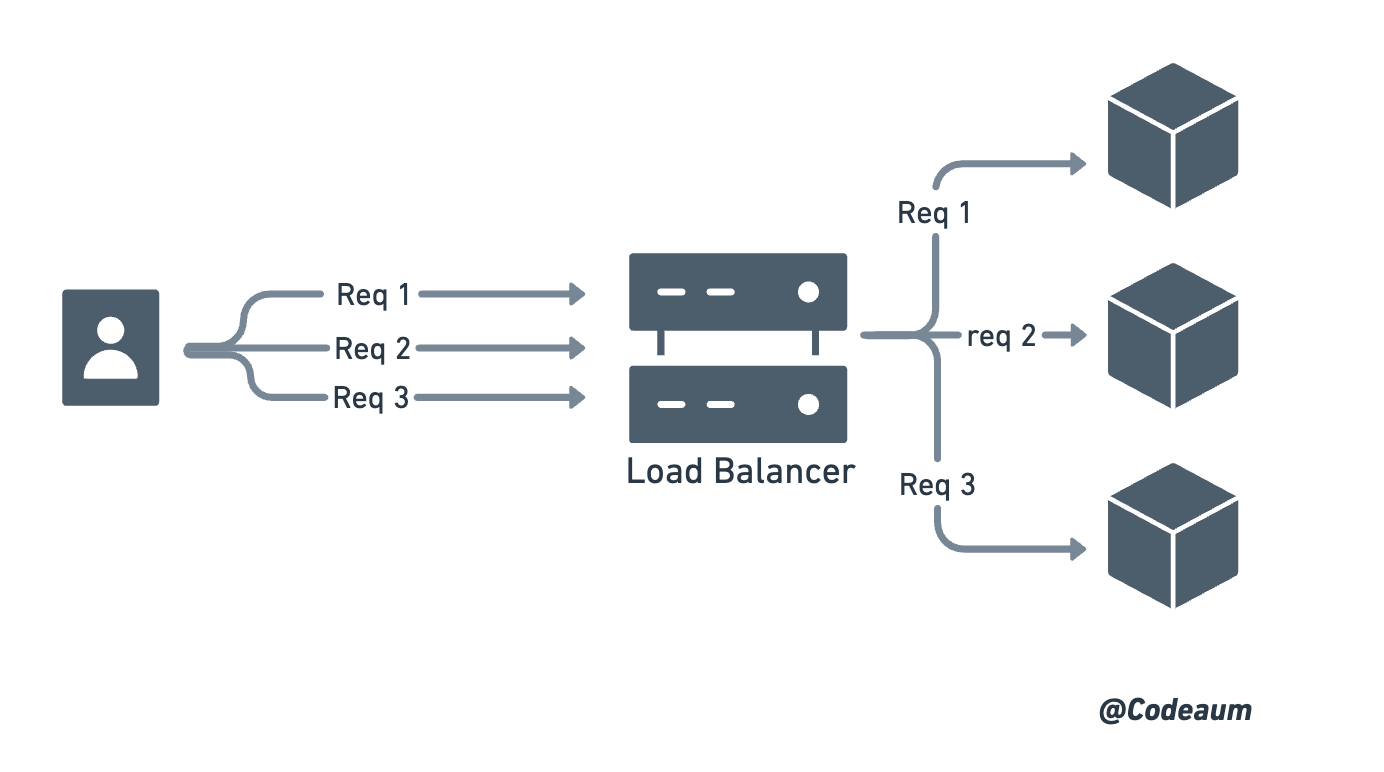

What Is a Load Balancer?

A load balancer is a critical component in modern web architecture, designed to efficiently distribute incoming network traffic across multiple servers. Its primary goal is to ensure that no single server becomes overwhelmed with too many requests, which can lead to slow response times or even server crashes.

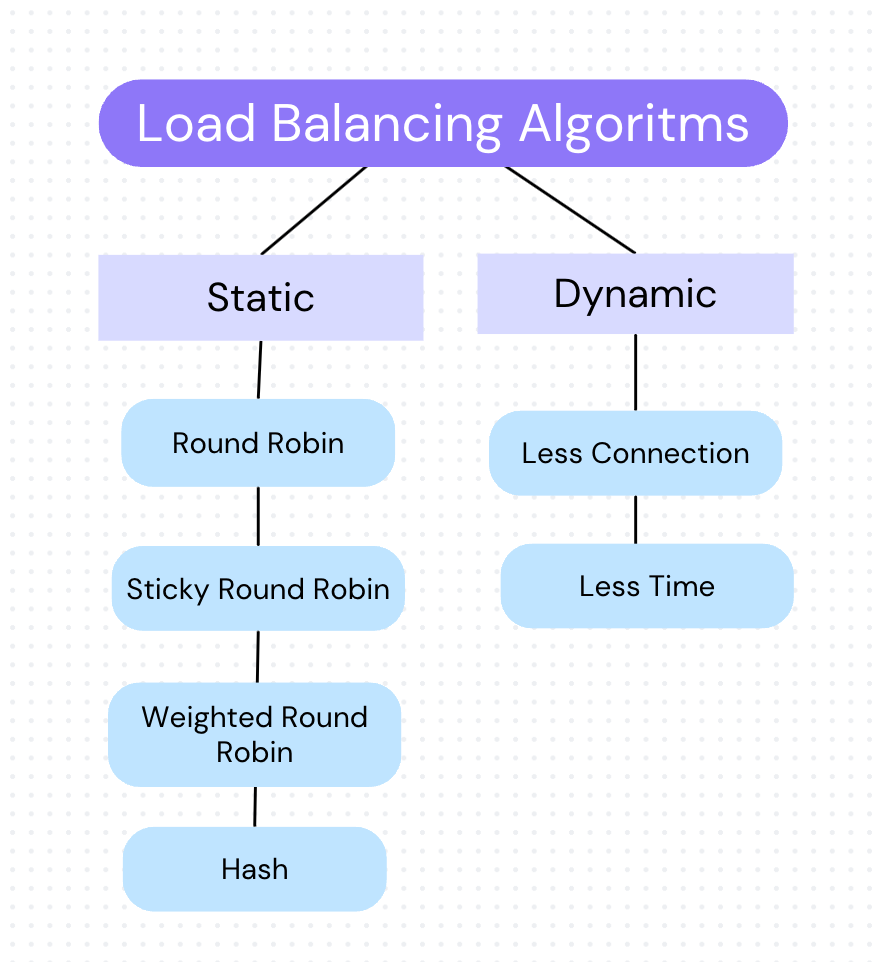

Load balancing algorithms generally fall into two types:

- Static → Static load balancing algorithms distribute tasks across servers based on predefined rules, without considering the current server load or real-time performance metrics.

- Dynamic → Dynamic load balancing algorithms adapt to real-time conditions by continuously monitoring server performance metrics such as CPU usage, memory load, and network traffic.

Key Differences

| Aspect | Static Load Balancing | Dynamic Load Balancing |
|---|---|---|
| Decision Making | Predefined and does not change during execution | Adjusts based on current conditions and performance |
| Flexibility | Less flexible, as it doesn't adapt to changes | Highly flexible, adapts to real-time server loads |
| Performance | Does not consider real-time performance metrics | Considers metrics like CPU usage, memory load, etc. |
| Complexity | Simpler to implement | More complex due to continuous monitoring |
| Examples | Round-robin, Hash-based | Least Connections, Weighted Response Time |

Some important types of load balancing algorithms:

Static Algorithms

Round Robin

Round-robin is a simple and widely used load-balancing algorithm that distributes incoming requests evenly across a group of servers. In this method, the load balancer assigns each request to the next server in a sequential order, looping back to the first server after reaching the last.

For example, consider a web application with three servers: A, B, and C. The round-robin algorithm assigns the first request to server A, the second to server B, the third to server C, and the fourth back to server A, continuing this pattern for subsequent requests.
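
The rotation described above can be sketched in a few lines of Python. This is a minimal illustration, not a production balancer: the `RoundRobinBalancer` class and the server names `A`, `B`, and `C` are hypothetical, and there is no health checking.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out servers in a fixed, repeating order (hypothetical sketch)."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("at least one server is required")
        # cycle() loops back to the first server after the last one.
        self._rotation = cycle(list(servers))

    def next_server(self):
        # Each call advances the rotation by one position.
        return next(self._rotation)


balancer = RoundRobinBalancer(["A", "B", "C"])
assignments = [balancer.next_server() for _ in range(4)]
print(assignments)  # ['A', 'B', 'C', 'A']
```

The fourth request wraps around to server A, matching the example in the text.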

Advantages:

- Simplicity: The round-robin algorithm is easy to implement and understand, requiring minimal configuration and no real-time monitoring of server performance.

- Fairness: It ensures equal distribution of requests across servers, making it suitable for environments with uniform server capacities and workloads.

Limitations:

- Uneven Load Distribution: Round-robin does not consider the current load or processing power of each server, so a slower or busier server receives as many requests as a faster or idle one.

- Lack of Fault Tolerance: If a server goes down, the basic round-robin algorithm continues to route requests to it unless additional mechanisms are in place to detect and bypass failed servers.

Sticky Round Robin

Sticky Round Robin is a variation of the round-robin load-balancing algorithm that ensures a user's requests are consistently directed to the same server during their session. This is particularly useful for applications that maintain session state on individual servers.

For example, consider an e-commerce site with three servers: A, B, and C. A new visitor might be assigned to server A for their first request. With sticky round-robin, all subsequent requests from that user during the session will also be sent to server A, preserving session continuity.
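
One way to sketch this behavior is to combine a round-robin counter with a session table. The example below assumes each request carries some session identifier (for instance from a cookie); the `StickyRoundRobinBalancer` class and the session names are hypothetical.

```python
class StickyRoundRobinBalancer:
    """Round-robin for new sessions, fixed server for known ones (sketch)."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("at least one server is required")
        self._servers = list(servers)
        self._next_index = 0
        self._sessions = {}  # session id -> assigned server

    def route(self, session_id):
        if session_id not in self._sessions:
            # New session: take the next server in the rotation.
            server = self._servers[self._next_index]
            self._next_index = (self._next_index + 1) % len(self._servers)
            self._sessions[session_id] = server
        # Known session: always return the server chosen the first time.
        return self._sessions[session_id]


balancer = StickyRoundRobinBalancer(["A", "B", "C"])
requests = ["alice", "bob", "alice", "carol", "alice", "dave"]
routes = [balancer.route(session) for session in requests]
print(routes)  # ['A', 'B', 'A', 'C', 'A', 'A']
```

Every request from `alice` lands on server A, while new sessions continue around the rotation. A real deployment would also need to expire old entries from the session table, which this sketch leaves out.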

Advantages:

- Session Continuity: Sticky round-robin maintains user session consistency by directing all requests from a user to the same server, ensuring a seamless user experience.

- Stateful Applications: It is ideal for applications that store session-specific data on individual servers, as it prevents session data from being scattered across multiple servers.

Limitations:

- Uneven Load Distribution: Sticky round-robin can lead to uneven load distribution if certain sessions are more resource-intensive, causing some servers to become overloaded while others remain underutilized.

- Scalability Issues: Adding or removing servers can disrupt existing session-to-server assignments, so users may lose session state that was stored on a removed server.

Weighted Round Robin

Weighted Round Robin is an enhanced version of the round-robin load-balancing algorithm that assigns different weights to servers based on their capacity and performance. Each server is given a weight, reflecting its processing power or resource availability, allowing more capable servers to handle a larger share of requests.

For example, if there are three servers with weights 3, 2, and 1, respectively, then out of every six requests the first server receives three, the second receives two, and the third receives one. This approach ensures a more efficient and balanced distribution of workloads.
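
The 3:2:1 split can be illustrated with a simple sketch that repeats each server in the schedule as many times as its weight. The `weighted_rotation` function and the server names are hypothetical. This naive version sends consecutive requests to the same server; real load balancers often interleave the picks more smoothly, but the proportions come out the same.

```python
from collections import Counter
from itertools import cycle


def weighted_rotation(weights):
    """Return an endless rotation where each server appears `weight` times."""
    schedule = [
        server
        for server, weight in weights.items()
        for _ in range(weight)
    ]
    if not schedule:
        raise ValueError("at least one server needs a positive weight")
    return cycle(schedule)


rotation = weighted_rotation({"A": 3, "B": 2, "C": 1})
first_six = [next(rotation) for _ in range(6)]
counts = Counter(first_six)
print(first_six)  # ['A', 'A', 'A', 'B', 'B', 'C']
```

Over each cycle of six requests, server A handles three, B handles two, and C handles one.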

Advantages:

- Resource Utilization: Weighted round-robin allocates more requests to servers with higher capacity, optimizing resource utilization by leveraging each server's strengths.

- Customizable Distribution: Administrators can tailor request distribution based on server capabilities, improving performance by assigning more traffic to more powerful servers.

Limitations:

- Complex Configuration: Assigning appropriate weights to servers requires careful analysis and manual configuration, which can be complex and time-consuming.

- Resource Changes: Weighted round-robin does not automatically adjust to real-time changes in server performance or availability, potentially leading to inefficiencies if server loads fluctuate.

IP/URL Hash

IP/URL hash load balancing is an algorithm that directs incoming requests to servers based on a hash of the client's IP address or the requested URL. This method creates a consistent hash value, which is then mapped to a specific server.
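
As a rough sketch of the idea, the key (an IP address or URL) can be hashed and the result reduced modulo the number of servers. The `pick_server` function, the server names, and the sample IP address are assumptions made for illustration. SHA-256 is used here only because it gives the same value on every run, unlike Python's built-in `hash()` for strings.

```python
import hashlib


def pick_server(key, servers):
    """Map a client IP or URL to one server via a stable hash (sketch)."""
    if not servers:
        raise ValueError("at least one server is required")
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    # Interpret the first 8 bytes as an integer and wrap it onto the pool.
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


servers = ["A", "B", "C"]
first = pick_server("203.0.113.7", servers)
second = pick_server("203.0.113.7", servers)
print(first == second)  # True
```

The same key always maps to the same server as long as the server list is unchanged. With this simple modulo mapping, adding or removing a server changes the mapping for many keys.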

Dynamic Algorithms

Least Connection

The Least Connections load balancing algorithm distributes incoming requests to the server with the fewest active connections at the time of the request. This approach ensures that each server handles an appropriate amount of traffic based on its current load, making it particularly effective for environments where requests have varying processing times and resource requirements.

For instance, if three servers are running and one server has significantly fewer connections, the next incoming request will be routed to that server, ensuring efficient handling and reduced latency.
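
A minimal sketch of this selection rule is shown below, assuming the balancer itself tracks when connections open and close. The `LeastConnectionsBalancer` class and its `acquire`/`release` methods are hypothetical names, and ties are broken by the order in which servers were listed.

```python
class LeastConnectionsBalancer:
    """Routes each request to the server with the fewest open connections."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("at least one server is required")
        self._active = {server: 0 for server in servers}

    def acquire(self):
        # min() scans the servers in insertion order, so ties go to the
        # server listed first.
        server = min(self._active, key=self._active.get)
        self._active[server] += 1
        return server

    def release(self, server):
        # Call this when a connection to `server` closes.
        if self._active[server] > 0:
            self._active[server] -= 1


balancer = LeastConnectionsBalancer(["A", "B", "C"])
first_three = [balancer.acquire() for _ in range(3)]
balancer.release("B")  # B now has fewer connections than A and C
next_pick = balancer.acquire()
print(first_three, next_pick)  # ['A', 'B', 'C'] B
```

Once server B finishes a request, it has the fewest active connections, so the next request goes to it.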

Advantages:

- Dynamic Load Distribution: Least connection efficiently balances traffic by considering real-time server loads, directing new requests to the least busy servers.

- Optimized Resource Usage: By minimizing server congestion, it enhances response times and overall application performance.

Limitations:

- Initial Connection Surge: A newly added server starts with zero connections, so it receives every new request until its count catches up, which can overwhelm it and lead to an uneven distribution initially.

- Complexity: Requires constant monitoring of active connections, increasing computational overhead and complexity compared to simpler algorithms.

Least Time

The Least Time load balancing algorithm routes incoming requests to the server with the lowest estimated response time. This estimation considers both the server's active connection count and the average response time of recent requests.

For example, if two servers have similar connection counts, but one server has a faster average response time, the next request will be directed to the quicker server.

However, it requires constant monitoring, which incurs overhead and adds complexity.
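
The sketch below shows one possible way to combine the two inputs. The scoring formula, `(active connections + 1) × average recent response time`, is an assumption chosen for illustration; actual load balancers may compute the estimate differently. The `LeastTimeBalancer` class, its method names, and the sample timings are hypothetical.

```python
from collections import deque


class LeastTimeBalancer:
    """Picks the server with the lowest estimated response time (sketch)."""

    def __init__(self, servers, window=10):
        if not servers:
            raise ValueError("at least one server is required")
        self._active = {server: 0 for server in servers}
        # Keep only the most recent `window` response times per server.
        self._samples = {server: deque(maxlen=window) for server in servers}

    def record_response(self, server, seconds):
        self._samples[server].append(seconds)

    def _estimate(self, server):
        samples = self._samples[server]
        # A server with no samples scores 0.0, so it gets tried first.
        average = sum(samples) / len(samples) if samples else 0.0
        # Assumed formula: queue length (including this request) times
        # the average recent response time.
        return (self._active[server] + 1) * average

    def acquire(self):
        server = min(self._active, key=self._estimate)
        self._active[server] += 1
        return server

    def release(self, server, seconds):
        # Call when a request finishes; `seconds` is how long it took.
        self._active[server] = max(0, self._active[server] - 1)
        self.record_response(server, seconds)


balancer = LeastTimeBalancer(["A", "B"])
balancer.record_response("A", 0.20)  # A has been averaging 200 ms
balancer.record_response("B", 0.05)  # B has been averaging 50 ms
choice = balancer.acquire()
print(choice)  # B
```

Both servers start with the same connection count, so the faster average response time decides the pick, as in the example above.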

Advantages:

- Optimized Response Times: Least time prioritizes routing requests to servers with the fastest response times, improving overall user experience and reducing latency.

- Efficient Load Balancing: By considering current loads and response metrics, it dynamically balances traffic to prevent server overloading.

Limitations:

- Resource Intensive: Requires continuous monitoring of server performance and response times, increasing computational complexity and overhead.

- Variable Metrics: Fluctuations in response times can lead to inconsistent routing decisions, potentially affecting load distribution stability.